Before starting to explain the different time complexities, let’s first look at what an actual algorithm is.

The formal definition of an algorithm is “a process or set of rules to be followed in calculations or other problem-solving operations, especially by a computer.”. So in other words, an algorithm is a defined path which is used by, for example the computer, to accomplish a solution to a given problem.

Even though it sounds easy and non-complex, it is quite the contrary. Many algorithms take a huge amount of time to accomplish something whereas some do not. This is why it is very important to know the complexity of a given algorithm. That is, because by knowing the algorithm’s complexity, that would give us the insight of how “valuable” or rather, how efficient the algorithm is.

Examples of algorithms:

- Image search

- Voice Input

- Suggestions

- Google Maps

- Google News

- etc.

Different types of algorithm complexities

-

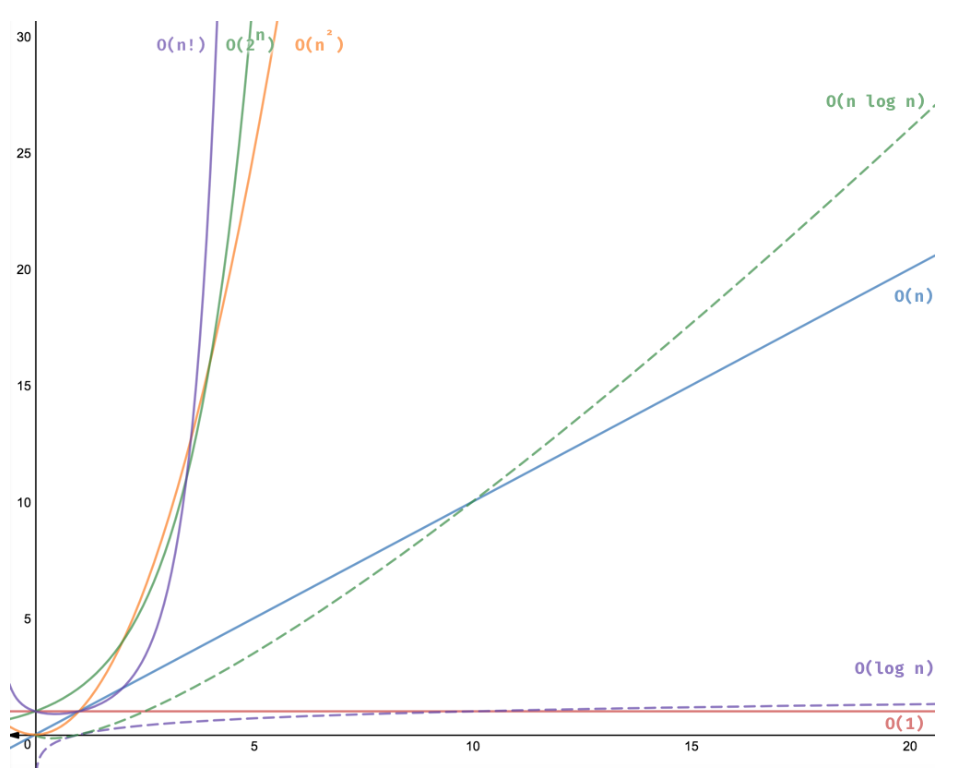

Constant Time: O(1)

If the amount of time does not depend on the input size, an algorithm size is said to run in constant time.

An example of that would be accessing an element from an array. You can access an array’s element simply by “invoking” its index.

-

Linear Time: O(n)

Linear time is when an algorithm depends on the input size. If the input size is n, then the complexity would also be n.

A famous example of algorithm with such time complexity would be the Linear Search.

-

Logarithmic Time: O(log n)

If the execution time is proportional to the logarithm of the input size, then it is said that the algorithm is run in logarithmic time.

A famous example of an algorithm in this time complexity is Binary Search.

-

Quadratic Time: O(n2)

Quadratic time is when the time execution is the square of the input size.

Some examples are bubble sort, selection sort, insertion sort.

-

Definition of “big Omega”

Big Omega, or also known as lower bound, is represented by the Ω symbol.

Big (O)

If the execution time is proportional to the logarithm of the input size, then it is said that the algorithm is run in logarithmic time. For example, if there is an array in Java that consists of 5 apples and you need to print every single apple, that would be O(5) or in other words, O(the length of the array) or O(n).

A famous example of an algorithm in this time complexity is Binary Search.

Big Theta

If T(n) is Θ(f(n)) it means T(n) grows (exactly) as fast as f(n). Also n + n is still n. A bit mouthful isn’t it? Let’s try a big simpler explanation.

You can think of Big Theta as:

“Will take no longer and no shorter than”

How to determine the complexity of a given program

int sumArray(int[] aiNumbers)

{

int iSum = 0;

for (int i=0; i<aiNumbers.length; i++)

iSum += aiNumbers[i];

return iSum;

}

The complexity of this program is simply aiNumbers.length. So if the length of this array is 4, then the complexity is 4. Likely so, if aiNumbers.length is let’s say 6, then the complexity is 6.

The reason the complexity is aiNumbers.length is because it loops aiNumbers.length times. Therefore, the complexity is O(N).

N = in.length;

i = 0;

while (i < N) {

for (int i=N-2; i<N; i++) {

System.out.println("Do something.");

}

}

The complexity of the above program would be N * N which is N to the power of 2. The reason for that is because the for loop will run N times each time, and the whole loop will run N times. Therefore, N * N. Therefore, the complexity of this algorithm is quadratic (O(n2))

for (int i = 0; i < n; i++) {

// do something

}

for (int i = 0; i < n; i++) {

// do something

}

In the example above the algorithm would be in n time complexity. The reason for that is because there 2 loops that loop n times – n + n. n+n in short is just n.

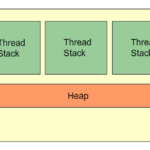

Visual representation of each algorithm

Source to the image: https://adrianmejia.com/most-popular-algorithms-time-complexity-every-programmer-should-know-free-online-tutorial-course/

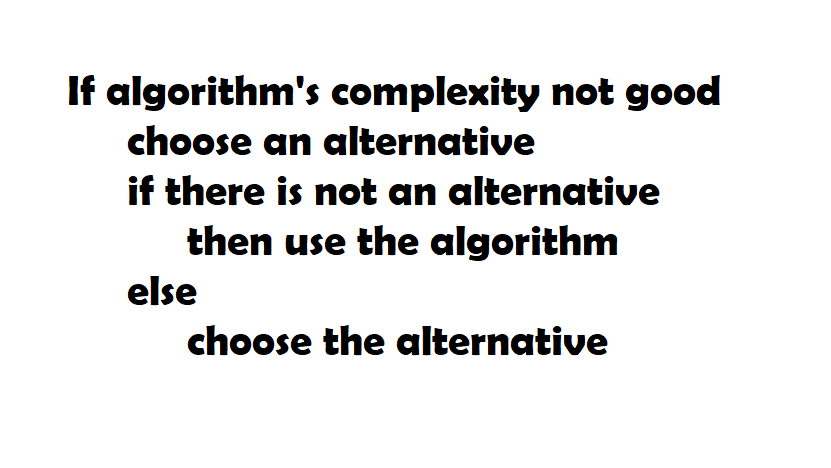

When it comes to algorithms and complexities, always try to omptimize your algorithm, whenever possible. A good way of doing so is to find commonalities in the input data, using collections, etc. Remember: memory is expensive, so is your time.